PsyLink is experimental hardware for reading muscle signals and using them to e.g. control the computer, recognize gestures, play video games, or simulate a keyboard. PsyLink is open source, sEMG-based, neural-network-powered, and can be obtained here.

This blog details the steps of building it and shows recent developments. Subscribe to new posts with any RSS reader, and join the community on the Matrix chatroom.

- All posts on one page
- 2024-08-16: Nine PsyLinks
- 2024-08-01: Android: First Step
- 2024-07-25: Rev2 Firmware
- 2024-07-24: Prototype Fund
- 2023-08-25: Data Sheets
- 2023-05-31: Prototype 10
- 2023-03-22: Enhanced Signal by >1000%
- 2023-03-06: Sample Signals
- 2023-02-05: 2022 Retrospective
- 2022-02-24: Added Bills of Materials
- 2022-02-23: 3M Red Dot electrodes
- 2022-02-22: Microchip 6N11-100
- 2022-02-16: Next Steps & Resources
- 2022-02-15: Mass production
- 2022-01-19: Prototype 9 + Matrix Chatroom
- 2022-01-18: HackChat & Hackaday Article
- 2021-12-19: Prototype 8 Demo Video
- 2021-12-18: Prototype 8
- 2021-12-16: INA155 Instrumentation …
- 2021-12-15: Power Module 4
- 2021-11-30: Batch Update
- 2021-07-17: Neurofeedback: Training in …
- 2021-07-06: New Frontpage + Logo
- 2021-06-24: Cyber Wristband of Telepathy …
- 2021-06-21: Running on AAA battery
- 2021-06-16: Power Module 3
- 2021-06-10: Believe The Datasheet
- 2021-06-04: Back to the Roots
- 2021-05-31: Website is Ready
- 2021-05-29: Dedicated Website
- 2021-05-17: Gyroscope + Accelerometer
- 2021-05-14: Wireless Prototype
- 2021-05-09: Power Supply Module
- 2021-05-07: New Name
- 2021-05-06: Finished new UI
- 2021-05-04: Higher Bandwidth, new UI
- 2021-04-30: PCB Time
- 2021-04-29: Soldering the Processing Units
- 2021-04-28: Going Wireless
- 2021-04-24: First Amplifier Circuit
- 2021-04-19: Amplifiers
- 2021-04-15: Multiplexers
- 2021-04-14: Data Cleaning
- 2021-04-13: Cyber Gauntlet +1
- 2021-04-11: Adding some AI
- 2021-04-09: F-Zero
- 2021-04-08: Baby Steps
- 2021-04-03: The Idea

Amplifiers

2021-04-19, by Roman

I have the feeling that before building the next prototype, I should figure out some way of enhancing the signal in hardware before passing it to the microcontroller. It's fun to hook the 'trodes straight to the ADC and still get results, but I doubt the results are optimal. So these days I'm mostly researching and tinkering with op-amps.

Multiplexers

2021-04-15, by Roman

The analog multiplexers (5x DG409DJZ) and other stuff arrived! I almost bought a digital multiplexer because I didn't know there were different types, but I think these will work for my use case. The raw signal that comes out of them looks a little different, but when I filter out the low and high frequencies with TestMultiplexer2.ino, the direct signal and the one that goes through the multiplexer look almost identical =D.
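TestMultiplexer2.ino itself isn't reproduced here, so as a rough illustration only: one cheap way to cut both low and high frequencies is to take the difference of two moving averages. A short average smooths out fast jitter, and subtracting a long average removes slow drift and the DC offset. The Python below is a minimal sketch under that assumption; the function names and window widths are hypothetical, not taken from the actual sketch.

```python
def moving_average(signal, width):
    """Causal moving average: mean of the last `width` samples
    (fewer at the very start, before `width` samples exist)."""
    out = []
    total = 0.0
    for i, x in enumerate(signal):
        total += x
        if i >= width:
            total -= signal[i - width]  # drop the sample leaving the window
        out.append(total / min(i + 1, width))
    return out


def crude_bandpass(signal, short_width=3, long_width=50):
    """Difference of two moving averages (widths are arbitrary guesses).
    The short average acts as a low-pass filter; subtracting the long
    average acts as a high-pass filter that removes drift and DC offset."""
    fast = moving_average(signal, short_width)
    slow = moving_average(signal, long_width)
    return [f - s for f, s in zip(fast, slow)]
```

A constant input (pure DC) comes out as all zeros, which is exactly the offset removal you want before comparing two channels.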

Data Cleaning

2021-04-14, by Roman

The Arduino code now produces samples at a consistent 1kHz. I also moved the serial read operations of the calibrator software into a separate thread, so that reading no longer slows down under heavy load; a slow reader let the buffer fill up and the labeling desynchronize. I am once again confused and surprised that I got ANY useful results before.
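A minimal sketch of the threading idea, assuming a Python calibrator: a daemon thread does nothing but block on reads and push lines into a thread-safe `queue.Queue`, while the UI thread drains whatever has arrived whenever it gets around to it. `read_line` is a hypothetical stand-in for something like a serial port's `readline()`, and returning `None` as an end-of-stream signal is likewise an assumption made so the example terminates.

```python
import queue
import threading


def start_reader(read_line, samples):
    """Run the blocking read loop in a daemon thread so a busy UI thread
    cannot stall it. `read_line` is a hypothetical callable returning one
    line, or None to signal end of stream (an assumption for this sketch)."""
    def loop():
        while True:
            line = read_line()
            if line is None:
                break
            samples.put(line)  # Queue.put is thread-safe

    thread = threading.Thread(target=loop, daemon=True)
    thread.start()
    return thread


def drain(samples):
    """Called from the UI/labeling thread: take everything queued so far
    without blocking."""
    batch = []
    while True:
        try:
            batch.append(samples.get_nowait())
        except queue.Empty:
            return batch
```

With this split, the serial input keeps being read even when the UI stalls; the backlog piles up in the queue instead, where nothing is dropped.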

I disconnected analog input pin 7 from all electrodes and used it as a baseline for the other analog reads. Subtracting pin 7 from every other pin cancels out the noise that all reads have in common. Hope this doesn't do more harm than good.
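In code, the baseline trick is one subtraction per channel. This sketch assumes one reading arrives as a list of eight raw ADC values indexed by analog pin A0 to A7, and that the subtraction happens on the laptop; the post doesn't say where it is done. Any offset that shows up equally on every pin, including the unconnected pin 7, drops out of the result.

```python
REFERENCE_PIN = 7  # the unconnected input used as a baseline, as in the post


def subtract_reference(reading):
    """reading: raw ADC values indexed by analog pin (assumed A0..A7).
    Returns the remaining pins with the reference pin's value subtracted,
    cancelling noise common to all inputs."""
    ref = reading[REFERENCE_PIN]
    return [v - ref for pin, v in enumerate(reading) if pin != REFERENCE_PIN]
```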

I also connected the ground line to one of the wrist electrodes rather than to the palm, since the palm electrode tended to move around a bit, rendering all the other signals unstable.

And did you know that the signals look much cleaner when you unplug the laptop from the power grid? :p

I'll finish with a video of me trying to play the frustrating one-button jumping game Sienna by flipping my wrist. This doesn't go so well, but maybe this game isn't the best benchmark :D My short-term goal is to finish level 1 of this game with my device.

Cyber Gauntlet +1

2021-04-13, by Roman

So if you've ever worked with electromyography, this will come as no surprise to you, but OMG, my signal got so much better once I added a ground electrode and connected it to the GND pin of the Arduino. I had tried using a ground electrode before, but connected it to AREF instead of GND, which had no effect, so I prioritized other branches of Pareto improvement.

I am once again confused and surprised that I got ANY useful results before.

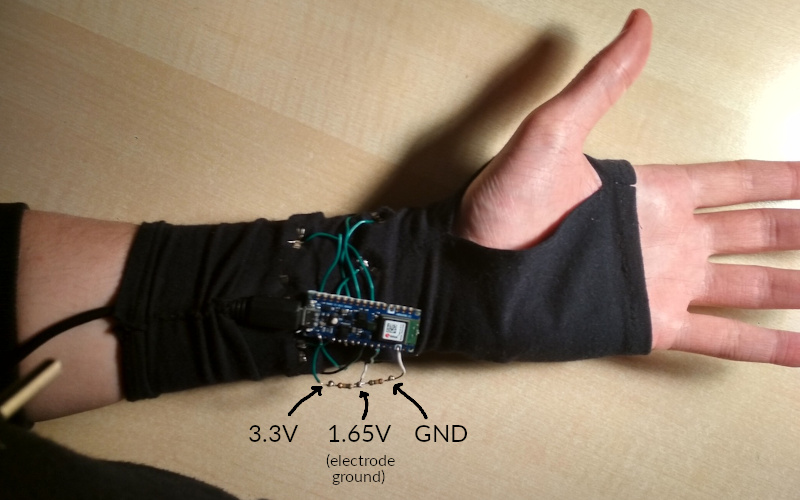

For prototype #3, I moved the electrodes further down towards the wrist in the hope that I'll be able to track individual finger movements. It has 17 electrodes: 2x8 going around the wrist, plus a ground electrode at the lower palm. Only 9 of the 17 electrodes are connected, 8 directly to the ADC pins and one to 1.65V, which I created through a voltage divider using two 560kΩ resistors between the 3.3V and GND pins of the Arduino, so that the electrode signals nicely oscillate around the middle of the input voltage range.
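As a quick check of the divider arithmetic: an unloaded divider outputs V_in · R_bottom / (R_top + R_bottom), so two equal 560kΩ resistors split 3.3V exactly in half. The helpers below are just that formula in Python; the function names are made up for illustration.

```python
def divider_output(v_in, r_top, r_bottom):
    """Unloaded voltage divider: V_out = V_in * R_bottom / (R_top + R_bottom)."""
    return v_in * r_bottom / (r_top + r_bottom)


def divider_current(v_in, r_top, r_bottom):
    """Standing current through the two series resistors."""
    return v_in / (r_top + r_bottom)


v_mid = divider_output(3.3, 560e3, 560e3)         # ≈ 1.65 V
i_standing = divider_current(3.3, 560e3, 560e3)   # ≈ 2.9e-6 A
```

The large resistors keep the standing current to about 2.9µA, at the cost of a high-impedance midpoint: anything drawing current from the 1.65V node will pull it away from the ideal value.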

It all started out like a piece of goth armwear:

Photo from the testing period:

Soldering wires to the electrodes:

The "opened" state shows the components of the device:

But it can be covered by wrapping around a layer of cloth, turning it into an inconspicuous fingerless glove:

If you look hard at this picture, you can see the LED of the Arduino glowing through the fabric, the voltage divider to the right of it, appearing like a line pressing through the fabric, the ground electrode on the lower right edge of my palm, and the food crumbs on my laptop :)

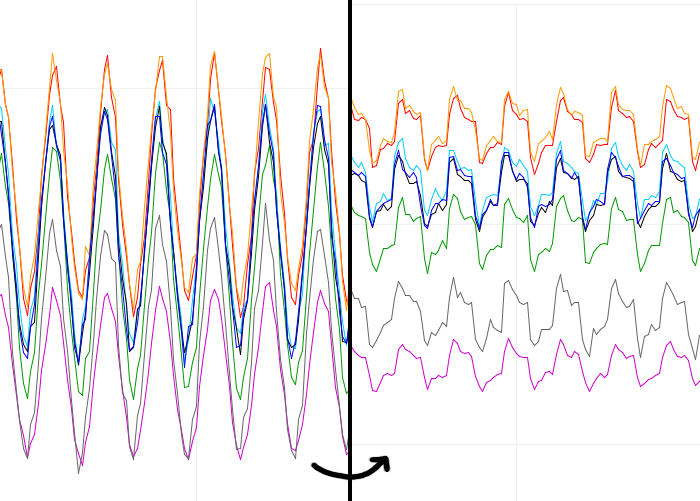

The signal seems to be much better, and as I move my arm and hand around, I can see distinct patterns using the Arduino IDE signal plotter, but for some reason the neural network doesn't seem to process it as well. Will need some tinkering. I hope it was not a mistake to leave out the electrodes at the upper forearm.

I already ordered parts for the next prototype. If all goes well, it's going to have 33 'trodes using analog multiplexers. The electrodes will be more professional & comfortable as well. Can't wait!

Adding some AI

2021-04-11, by Roman

Most neural interfaces I've seen so far require the human to learn how to use the machine, following unintuitive rules like "Contract muscle X to perform action Y". But why can't we just stick a bunch of artificial neurons on top of the human's biological neural network, and make the computer train them for us?

While we're at it, why not replace the entire signal processing code with a bunch more artificial neurons? Surely a NN can figure out how to do a bandpass filter and moving averages, and hopefully come up with something more advanced than that. The more I pretend to know anything about signal processing, the worse this thing is going to get, so let's just leave it to the AI overlords.

The Arduino Part

The Arduino Nano 33 BLE Sense supports TensorFlow Lite, so I was eager to move the neural network prediction code onto the microcontroller, but that would slow down development, so for now I run it all on my laptop.

The Arduino code now just passes through the value of the analog pins to the serial port.
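The post doesn't specify the serial line format. Assuming one line per sample with the eight pin values separated by spaces or commas, the laptop side might parse each line like the hypothetical helper below. It expects already-decoded text (pyserial's `readline()` returns bytes, so decode first) and discards partial lines, which typically show up right after the port is opened.

```python
def parse_sample(line, channels=8):
    """Parse one line such as '512 498 530 ...' (assumed format) into ints.
    Returns None for partial or garbled lines instead of raising."""
    parts = line.replace(",", " ").split()
    if len(parts) != channels:
        return None
    try:
        return [int(p) for p in parts]
    except ValueError:
        return None
```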

Calibrating with a neural network

For this, I built a simple user interface, mostly an empty window with a menu to select actions, and a key grabber. (source code)

The idea is to correlate hand/arm movements with keys that should be pressed when you perform those hand/arm movements. To train the AI to understand you, perform the following calibration steps:

- Put on the device and jack it into your laptop

- Start the Calibrator

- Select the action "Start/Resume Recording" to start gathering training data for the neural network

- Now, for as long as you're comfortable (30 seconds worked for me), move your hand around a bit. Hold it in various neutral positions, as well as positions which should produce a certain action. Press the key on your laptop whenever you intend your hand movement to produce that key press (e.g. wave to the left, and hold the left arrow key on the laptop at the same time). The better you do this, the better the neural network will understand wtf you want from it.

- Holding two keys at the same time is theoretically supported, but I used Tkinter, which has an unreliable key grabbing mechanism. Better stick to single keys for now.

- Tip: The electric signals change when you hold a position for a couple of seconds. If you want the neural network to take this into account, hold the positions for a while during recording.

- Press Esc to stop recording

- Save the recordings, if desired

- Select the action "Train AI" and watch the console output. By default it trains for 100 epochs. If you're not happy with the result yet, repeat this step until you are.

- Save the AI model, if desired

- Select the action "Activate AI". If everything worked out, the AI overlord will now try to recognize the input patterns with which you associated certain key presses, and press the keys for you. =D
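The core of the calibration idea is pairing every recorded sample with whatever key was held at that moment. A minimal sketch, assuming the recording is a list of per-timestep channel readings plus a parallel list of held keys (None when nothing is pressed): slice the stream into overlapping windows and label each window with the key held at its last timestep. The function name and the window length of 16 are hypothetical; the "no key" label mirrors the post's own class name.

```python
NO_KEY = "no key"


def build_dataset(samples, held_keys, window=16):
    """samples: per-timestep lists of channel values.
    held_keys: the key held at each timestep, or None.
    Returns (windows, labels): each window is `window` consecutive samples,
    labelled with the key held at the window's final timestep."""
    if len(samples) != len(held_keys):
        raise ValueError("need exactly one key state per sample")
    windows, labels = [], []
    for end in range(window, len(samples) + 1):
        windows.append(samples[end - window:end])
        key = held_keys[end - 1]
        labels.append(key if key is not None else NO_KEY)
    return windows, labels
```

Labeling by the last timestep asks the network to decide what the hand is doing right now, given a short slice of recent history.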

Results

I used this to walk left and right in 2020game.io and it worked pretty well. With zero manual signal processing and zero manual calibration! The mathemagical incantations just do it for me. This is awesome!

Some quick facts:

- 8 electrodes at semi-random points on my forearm

- Recorded signals for 40s, resulting in 10000 samples

- I specified 3 classifier labels: "left", "right", and "no key"

- Trained for 100 epochs, took 1-2 minutes.

- The resulting loss was 0.0758, and the accuracy was 0.9575.

- Neural network has 2 conv layers, 3 dense, and 1 output layer.
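The post only gives layer counts, so here is an untrained NumPy forward pass showing how such a stack could be wired: 8-channel windows go through two 1D convolutions, three dense layers, and a 3-way softmax output for "left", "right", and "no key". Window length, kernel size, filter count, and hidden width are all guesses, and training (the 100 epochs above) would normally be done with a framework such as Keras rather than by hand. For scale, 10000 samples in 40s works out to 250 samples per second.

```python
import numpy as np

rng = np.random.default_rng(0)
LABELS = ["left", "right", "no key"]


def conv1d_valid(x, kernels, bias):
    """x: (time, in_ch); kernels: (k, in_ch, out_ch).
    'Valid' 1D convolution (cross-correlation, as in most NN libraries) + ReLU."""
    k = kernels.shape[0]
    steps = x.shape[0] - k + 1
    out = np.stack([np.tensordot(x[t:t + k], kernels, axes=([0, 1], [0, 1]))
                    for t in range(steps)])
    return np.maximum(out + bias, 0.0)


def dense(x, w, b, relu=True):
    y = x @ w + b
    return np.maximum(y, 0.0) if relu else y


def softmax(z):
    e = np.exp(z - z.max())  # subtract max for numerical stability
    return e / e.sum()


def init_params(window=16, channels=8, classes=3, k=3, filters=16, hidden=32):
    """Random (untrained) weights for 2 conv + 3 dense + 1 output layer.
    All sizes are assumptions, not the post's actual configuration."""
    def w(*shape):
        return rng.normal(0.0, 0.1, size=shape)
    flat = (window - 2 * (k - 1)) * filters  # time steps left after two valid convs
    return {
        "conv": [(w(k, channels, filters), np.zeros(filters)),
                 (w(k, filters, filters), np.zeros(filters))],
        "dense": [(w(flat, hidden), np.zeros(hidden)),
                  (w(hidden, hidden), np.zeros(hidden)),
                  (w(hidden, hidden), np.zeros(hidden))],
        "out": (w(hidden, classes), np.zeros(classes)),
    }


def predict(params, window):
    """window: (time, channels) array -> probabilities over LABELS."""
    h = window
    for kernels, bias in params["conv"]:
        h = conv1d_valid(h, kernels, bias)
    h = h.reshape(-1)
    for weights, bias in params["dense"]:
        h = dense(h, weights, bias)
    w_out, b_out = params["out"]
    return softmax(dense(h, w_out, b_out, relu=False))
```

With random weights the output is meaningless, but `LABELS[int(np.argmax(predict(params, window)))]` is how a trained model's output would map back to a key decision.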

Video demo:

Still a lot of work to do, but I'm happy with the software for now. Will tweak the hardware next.

Now I'm wondering whether I'm just picking low-hanging fruit here, or if non-invasive neural interfaces are really just that easy. How could CTRL-Labs sell their wristband to Facebook for $500,000,000-$1,000,000,000? Was it one of those scams where decision-makers were hypnotized by buzzwords and screamed "Shut up and take my money"? Or do they really have some secret sauce that sets them apart? Well, I'll keep tinkering. Just imagine what this is going to look like a few posts down the line!